Merge branch 'main-fix' into main

@@ -101,7 +101,7 @@

|

|||||||

|

|

||||||

<div align="center">

|

<div align="center">

|

||||||

<a href="https://www.bilibili.com/video/BV1amAneGE3P" target="_blank">

|

<a href="https://www.bilibili.com/video/BV1amAneGE3P" target="_blank">

|

||||||

<img src="docs/video.png" width="300" alt="麦麦演示视频">

|

<img src="docs/pic/video.png" width="300" alt="麦麦演示视频">

|

||||||

<br>

|

<br>

|

||||||

👆 点击观看麦麦演示视频 👆

|

👆 点击观看麦麦演示视频 👆

|

||||||

|

|

||||||

@@ -149,6 +149,8 @@ MaiMBot是一个开源项目,我们非常欢迎你的参与。你的贡献,

|

|||||||

|

|

||||||

- [📦 Linux 手动部署指南 ](docs/manual_deploy_linux.md)

|

- [📦 Linux 手动部署指南 ](docs/manual_deploy_linux.md)

|

||||||

|

|

||||||

|

- [📦 macOS 手动部署指南 ](docs/manual_deploy_macos.md)

|

||||||

|

|

||||||

如果你不知道Docker是什么,建议寻找相关教程或使用手动部署 **(现在不建议使用docker,更新慢,可能不适配)**

|

如果你不知道Docker是什么,建议寻找相关教程或使用手动部署 **(现在不建议使用docker,更新慢,可能不适配)**

|

||||||

|

|

||||||

- [🐳 Docker部署指南](docs/docker_deploy.md)

|

- [🐳 Docker部署指南](docs/docker_deploy.md)

|

||||||

|

|||||||

14

bot.py

@@ -204,8 +204,8 @@ def check_eula():

|

|||||||

eula_confirmed = True

|

eula_confirmed = True

|

||||||

eula_updated = False

|

eula_updated = False

|

||||||

if eula_new_hash == os.getenv("EULA_AGREE"):

|

if eula_new_hash == os.getenv("EULA_AGREE"):

|

||||||

eula_confirmed = True

|

eula_confirmed = True

|

||||||

eula_updated = False

|

eula_updated = False

|

||||||

|

|

||||||

# 检查隐私条款确认文件是否存在

|

# 检查隐私条款确认文件是否存在

|

||||||

if privacy_confirm_file.exists():

|

if privacy_confirm_file.exists():

|

||||||

@@ -214,14 +214,16 @@ def check_eula():

|

|||||||

if privacy_new_hash == confirmed_content:

|

if privacy_new_hash == confirmed_content:

|

||||||

privacy_confirmed = True

|

privacy_confirmed = True

|

||||||

privacy_updated = False

|

privacy_updated = False

|

||||||

if privacy_new_hash == os.getenv("PRIVACY_AGREE"):

|

if privacy_new_hash == os.getenv("PRIVACY_AGREE"):

|

||||||

privacy_confirmed = True

|

privacy_confirmed = True

|

||||||

privacy_updated = False

|

privacy_updated = False

|

||||||

|

|

||||||

# 如果EULA或隐私条款有更新,提示用户重新确认

|

# 如果EULA或隐私条款有更新,提示用户重新确认

|

||||||

if eula_updated or privacy_updated:

|

if eula_updated or privacy_updated:

|

||||||

print("EULA或隐私条款内容已更新,请在阅读后重新确认,继续运行视为同意更新后的以上两款协议")

|

print("EULA或隐私条款内容已更新,请在阅读后重新确认,继续运行视为同意更新后的以上两款协议")

|

||||||

print(f'输入"同意"或"confirmed"或设置环境变量"EULA_AGREE={eula_new_hash}"和"PRIVACY_AGREE={privacy_new_hash}"继续运行')

|

print(

|

||||||

|

f'输入"同意"或"confirmed"或设置环境变量"EULA_AGREE={eula_new_hash}"和"PRIVACY_AGREE={privacy_new_hash}"继续运行'

|

||||||

|

)

|

||||||

while True:

|

while True:

|

||||||

user_input = input().strip().lower()

|

user_input = input().strip().lower()

|

||||||

if user_input in ["同意", "confirmed"]:

|

if user_input in ["同意", "confirmed"]:

|

||||||
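Reviewer note on the `check_eula()` hunks above: the logic compares a hash of the current agreement text against either a stored confirmation file or an environment variable (`EULA_AGREE` / `PRIVACY_AGREE`). The following is a minimal sketch of that comparison, not the project's actual implementation. The helper names `file_hash` and `is_confirmed`, the use of MD5, and the idea that the confirmation file stores only the hash are assumptions made for illustration.

```python
import hashlib
import os
from pathlib import Path


def file_hash(path: Path) -> str:
    """Return a hex digest of the file's current contents (MD5 is an assumption)."""
    return hashlib.md5(path.read_bytes()).hexdigest()


def is_confirmed(doc_path: Path, confirm_path: Path, env_name: str) -> bool:
    """Return True if the current version of the document was already accepted."""
    new_hash = file_hash(doc_path)
    # Case 1: the user accepted earlier and the hash was written to a confirmation file.
    if confirm_path.exists():
        if confirm_path.read_text(encoding="utf-8").strip() == new_hash:
            return True
    # Case 2: the user accepted non-interactively through an environment variable.
    return os.getenv(env_name) == new_hash
```

Because the stored value is a hash of the text, any edit to the agreement invalidates the earlier confirmation, which matches the "updated, please confirm again" prompt in the hunk.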

|

|||||||

@@ -10,7 +10,7 @@

|

|||||||

|

|

||||||

- 为什么显示:"缺失必要的API KEY" ❓

|

- 为什么显示:"缺失必要的API KEY" ❓

|

||||||

|

|

||||||

<img src="API_KEY.png" width=650>

|

<img src="./pic/API_KEY.png" width=650>

|

||||||

|

|

||||||

>你需要在 [Silicon Flow Api](https://cloud.siliconflow.cn/account/ak) 网站上注册一个账号,然后点击这个链接打开API KEY获取页面。

|

>你需要在 [Silicon Flow Api](https://cloud.siliconflow.cn/account/ak) 网站上注册一个账号,然后点击这个链接打开API KEY获取页面。

|

||||||

>

|

>

|

||||||

@@ -41,19 +41,19 @@

|

|||||||

|

|

||||||

>打开你的MongoDB Compass软件,你会在左上角看到这样的一个界面:

|

>打开你的MongoDB Compass软件,你会在左上角看到这样的一个界面:

|

||||||

>

|

>

|

||||||

><img src="MONGO_DB_0.png" width=250>

|

><img src="./pic/MONGO_DB_0.png" width=250>

|

||||||

>

|

>

|

||||||

><br>

|

><br>

|

||||||

>

|

>

|

||||||

>点击 "CONNECT" 之后,点击展开 MegBot 标签栏

|

>点击 "CONNECT" 之后,点击展开 MegBot 标签栏

|

||||||

>

|

>

|

||||||

><img src="MONGO_DB_1.png" width=250>

|

><img src="./pic/MONGO_DB_1.png" width=250>

|

||||||

>

|

>

|

||||||

><br>

|

><br>

|

||||||

>

|

>

|

||||||

>点进 "emoji" 再点击 "DELETE" 删掉所有条目,如图所示

|

>点进 "emoji" 再点击 "DELETE" 删掉所有条目,如图所示

|

||||||

>

|

>

|

||||||

><img src="MONGO_DB_2.png" width=450>

|

><img src="./pic/MONGO_DB_2.png" width=450>

|

||||||

>

|

>

|

||||||

><br>

|

><br>

|

||||||

>

|

>

|

||||||

|

|||||||

@@ -147,9 +147,7 @@ enable_check = false # 是否要检查表情包是不是合适的喵

|

|||||||

check_prompt = "符合公序良俗" # 检查表情包的标准呢

|

check_prompt = "符合公序良俗" # 检查表情包的标准呢

|

||||||

|

|

||||||

[others]

|

[others]

|

||||||

enable_advance_output = true # 是否要显示更多的运行信息呢

|

|

||||||

enable_kuuki_read = true # 让机器人能够"察言观色"喵

|

enable_kuuki_read = true # 让机器人能够"察言观色"喵

|

||||||

enable_debug_output = false # 是否启用调试输出喵

|

|

||||||

enable_friend_chat = false # 是否启用好友聊天喵

|

enable_friend_chat = false # 是否启用好友聊天喵

|

||||||

|

|

||||||

[groups]

|

[groups]

|

||||||

|

|||||||

@@ -115,9 +115,7 @@ talk_frequency_down = [] # 降低回复频率的群号

|

|||||||

ban_user_id = [] # 禁止回复的用户QQ号

|

ban_user_id = [] # 禁止回复的用户QQ号

|

||||||

|

|

||||||

[others]

|

[others]

|

||||||

enable_advance_output = true # 是否启用高级输出

|

|

||||||

enable_kuuki_read = true # 是否启用读空气功能

|

enable_kuuki_read = true # 是否启用读空气功能

|

||||||

enable_debug_output = false # 是否启用调试输出

|

|

||||||

enable_friend_chat = false # 是否启用好友聊天

|

enable_friend_chat = false # 是否启用好友聊天

|

||||||

|

|

||||||

# 模型配置

|

# 模型配置

|

||||||

|

|||||||

@@ -1,48 +1,51 @@

|

|||||||

# 面向纯新手的Linux服务器麦麦部署指南

|

# 面向纯新手的Linux服务器麦麦部署指南

|

||||||

|

|

||||||

## 你得先有一个服务器

|

|

||||||

|

|

||||||

为了能使麦麦在你的电脑关机之后还能运行,你需要一台不间断开机的主机,也就是我们常说的服务器。

|

## 事前准备

|

||||||

|

为了能使麦麦不间断的运行,你需要一台一直开着的主机。

|

||||||

|

|

||||||

|

### 如果你想购买服务器

|

||||||

华为云、阿里云、腾讯云等等都是在国内可以选择的选择。

|

华为云、阿里云、腾讯云等等都是在国内可以选择的选择。

|

||||||

|

|

||||||

你可以去租一台最低配置的就足敷需要了,按月租大概十几块钱就能租到了。

|

租一台最低配置的就足敷需要了,按月租大概十几块钱就能租到了。

|

||||||

|

|

||||||

我们假设你已经租好了一台Linux架构的云服务器。我用的是阿里云ubuntu24.04,其他的原理相似。

|

### 如果你不想购买服务器

|

||||||

|

你可以准备一台可以一直开着的电脑/主机,只需要保证能够正常访问互联网即可

|

||||||

|

|

||||||

|

我们假设你已经有了一台Linux架构的服务器。举例使用的是Ubuntu24.04,其他的原理相似。

|

||||||

|

|

||||||

## 0.我们就从零开始吧

|

## 0.我们就从零开始吧

|

||||||

|

|

||||||

### 网络问题

|

### 网络问题

|

||||||

|

|

||||||

为访问github相关界面,推荐去下一款加速器,新手可以试试watttoolkit。

|

为访问Github相关界面,推荐去下一款加速器,新手可以试试[Watt Toolkit](https://gitee.com/rmbgame/SteamTools/releases/latest)。

|

||||||

|

|

||||||

### 安装包下载

|

### 安装包下载

|

||||||

|

|

||||||

#### MongoDB

|

#### MongoDB

|

||||||

|

进入[MongoDB下载页](https://www.mongodb.com/try/download/community-kubernetes-operator),并选择版本

|

||||||

|

|

||||||

对于ubuntu24.04 x86来说是这个:

|

以Ubuntu24.04 x86为例,保持如图所示选项,点击`Download`即可,如果是其他系统,请在`Platform`中自行选择:

|

||||||

|

|

||||||

https://repo.mongodb.org/apt/ubuntu/dists/noble/mongodb-org/8.0/multiverse/binary-amd64/mongodb-org-server_8.0.5_amd64.deb

|

|

||||||

|

|

||||||

如果不是就在这里自行选择对应版本

|

|

||||||

|

|

||||||

https://www.mongodb.com/try/download/community-kubernetes-operator

|

不想使用上述方式?你也可以参考[官方文档](https://www.mongodb.com/zh-cn/docs/manual/administration/install-on-linux/#std-label-install-mdb-community-edition-linux)进行安装,进入后选择自己的系统版本即可

|

||||||

|

|

||||||

#### Napcat

|

#### QQ(可选)/Napcat

|

||||||

|

*如果你使用Napcat的脚本安装,可以忽略此步*

|

||||||

在这里选择对应版本。

|

访问https://github.com/NapNeko/NapCatQQ/releases/latest

|

||||||

|

在图中所示区域可以找到QQ的下载链接,选择对应版本下载即可

|

||||||

https://github.com/NapNeko/NapCatQQ/releases/tag/v4.6.7

|

从这里下载,可以保证你下载到的QQ版本兼容最新版Napcat

|

||||||

|

|

||||||

对于ubuntu24.04 x86来说是这个:

|

如果你不想使用Napcat的脚本安装,还需参考[Napcat-Linux手动安装](https://www.napcat.wiki/guide/boot/Shell-Linux-SemiAuto)

|

||||||

|

|

||||||

https://dldir1.qq.com/qqfile/qq/QQNT/ee4bd910/linuxqq_3.2.16-32793_amd64.deb

|

|

||||||

|

|

||||||

#### 麦麦

|

#### 麦麦

|

||||||

|

|

||||||

https://github.com/SengokuCola/MaiMBot/archive/refs/tags/0.5.8-alpha.zip

|

先打开https://github.com/MaiM-with-u/MaiBot/releases

|

||||||

|

往下滑找到这个

|

||||||

下载这个官方压缩包。

|

|

||||||

|

下载箭头所指这个压缩包。

|

||||||

|

|

||||||

### 路径

|

### 路径

|

||||||

|

|

||||||

@@ -53,10 +56,10 @@ https://github.com/SengokuCola/MaiMBot/archive/refs/tags/0.5.8-alpha.zip

|

|||||||

```

|

```

|

||||||

moi

|

moi

|

||||||

└─ mai

|

└─ mai

|

||||||

├─ linuxqq_3.2.16-32793_amd64.deb

|

├─ linuxqq_3.2.16-32793_amd64.deb # linuxqq安装包

|

||||||

├─ mongodb-org-server_8.0.5_amd64.deb

|

├─ mongodb-org-server_8.0.5_amd64.deb # MongoDB的安装包

|

||||||

└─ bot

|

└─ bot

|

||||||

└─ MaiMBot-0.5.8-alpha.zip

|

└─ MaiMBot-0.5.8-alpha.zip # 麦麦的压缩包

|

||||||

```

|

```

|

||||||

|

|

||||||

### 网络

|

### 网络

|

||||||

@@ -69,7 +72,7 @@ moi

|

|||||||

|

|

||||||

## 2. Python的安装

|

## 2. Python的安装

|

||||||

|

|

||||||

- 导入 Python 的稳定版 PPA:

|

- 导入 Python 的稳定版 PPA(Ubuntu需执行此步,Debian可忽略):

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

sudo add-apt-repository ppa:deadsnakes/ppa

|

sudo add-apt-repository ppa:deadsnakes/ppa

|

||||||

@@ -92,6 +95,11 @@ sudo apt install python3.12

|

|||||||

```bash

|

```bash

|

||||||

python3.12 --version

|

python3.12 --version

|

||||||

```

|

```

|

||||||

|

- (可选)更新替代方案,设置 python3.12 为默认的 python3 版本:

|

||||||

|

```bash

|

||||||

|

sudo update-alternatives --install /usr/bin/python3 python3 /usr/bin/python3.12 1

|

||||||

|

sudo update-alternatives --config python3

|

||||||

|

```

|

||||||

|

|

||||||

- 在「终端」中,执行以下命令安装 pip:

|

- 在「终端」中,执行以下命令安装 pip:

|

||||||

|

|

||||||

@@ -141,23 +149,17 @@ systemctl status mongod #通过这条指令检查运行状态

|

|||||||

sudo systemctl enable mongod

|

sudo systemctl enable mongod

|

||||||

```

|

```

|

||||||

|

|

||||||

## 5.napcat的安装

|

## 5.Napcat的安装

|

||||||

|

|

||||||

``` bash

|

``` bash

|

||||||

|

# 该脚本适用于支持Ubuntu 20+/Debian 10+/Centos9

|

||||||

curl -o napcat.sh https://nclatest.znin.net/NapNeko/NapCat-Installer/main/script/install.sh && sudo bash napcat.sh

|

curl -o napcat.sh https://nclatest.znin.net/NapNeko/NapCat-Installer/main/script/install.sh && sudo bash napcat.sh

|

||||||

```

|

```

|

||||||

|

执行后,脚本会自动帮你部署好QQ及Napcat

|

||||||

上面的不行试试下面的

|

|

||||||

|

|

||||||

``` bash

|

|

||||||

dpkg -i linuxqq_3.2.16-32793_amd64.deb

|

|

||||||

apt-get install -f

|

|

||||||

dpkg -i linuxqq_3.2.16-32793_amd64.deb

|

|

||||||

```

|

|

||||||

|

|

||||||

成功的标志是输入``` napcat ```出来炫酷的彩虹色界面

|

成功的标志是输入``` napcat ```出来炫酷的彩虹色界面

|

||||||

|

|

||||||

## 6.napcat的运行

|

## 6.Napcat的运行

|

||||||

|

|

||||||

此时你就可以根据提示在```napcat```里面登录你的QQ号了。

|

此时你就可以根据提示在```napcat```里面登录你的QQ号了。

|

||||||

|

|

||||||

@@ -170,6 +172,13 @@ napcat status #检查运行状态

|

|||||||

|

|

||||||

```http://<你服务器的公网IP>:6099/webui?token=napcat```

|

```http://<你服务器的公网IP>:6099/webui?token=napcat```

|

||||||

|

|

||||||

|

如果你部署在自己的电脑上:

|

||||||

|

```http://127.0.0.1:6099/webui?token=napcat```

|

||||||

|

|

||||||

|

> [!WARNING]

|

||||||

|

> 如果你的麦麦部署在公网,请**务必**修改Napcat的默认密码

|

||||||

|

|

||||||

|

|

||||||

第一次是这个,后续改了密码之后token就会对应修改。你也可以使用```napcat log <你的QQ号>```来查看webui地址。把里面的```127.0.0.1```改成<你服务器的公网IP>即可。

|

第一次是这个,后续改了密码之后token就会对应修改。你也可以使用```napcat log <你的QQ号>```来查看webui地址。把里面的```127.0.0.1```改成<你服务器的公网IP>即可。

|

||||||

|

|

||||||

登录上之后在网络配置界面添加websocket客户端,名称随便输一个,url改成`ws://127.0.0.1:8080/onebot/v11/ws`保存之后点启用,就大功告成了。

|

登录上之后在网络配置界面添加websocket客户端,名称随便输一个,url改成`ws://127.0.0.1:8080/onebot/v11/ws`保存之后点启用,就大功告成了。

|

||||||
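Reviewer note: the WebSocket client URL in the Napcat step, `ws://127.0.0.1:8080/onebot/v11/ws`, appears to follow the `HOST` and `PORT` values shown for `.env.prod` elsewhere in this diff. A minimal sketch of that relationship, assuming the bot listens on exactly those values; the function name `onebot_ws_url` is hypothetical.

```python
def onebot_ws_url(host: str = "127.0.0.1", port: int = 8080) -> str:
    """Build the reverse WebSocket URL that Napcat should connect to.

    The defaults mirror HOST=127.0.0.1 and PORT=8080 from the guide.
    """
    return f"ws://{host}:{port}/onebot/v11/ws"


if __name__ == "__main__":
    # With the defaults this prints the URL given in the guide.
    print(onebot_ws_url())
```

If `HOST` or `PORT` is changed in `.env.prod`, the URL entered in the Napcat WebUI presumably has to change with it.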

@@ -178,7 +187,7 @@ napcat status #检查运行状态

|

|||||||

|

|

||||||

### step 1 安装解压软件

|

### step 1 安装解压软件

|

||||||

|

|

||||||

```

|

```bash

|

||||||

sudo apt-get install unzip

|

sudo apt-get install unzip

|

||||||

```

|

```

|

||||||

|

|

||||||

@@ -229,138 +238,11 @@ bot

|

|||||||

|

|

||||||

你可以注册一个硅基流动的账号,通过邀请码注册有14块钱的免费额度:https://cloud.siliconflow.cn/i/7Yld7cfg。

|

你可以注册一个硅基流动的账号,通过邀请码注册有14块钱的免费额度:https://cloud.siliconflow.cn/i/7Yld7cfg。

|

||||||

|

|

||||||

#### 在.env.prod中定义API凭证:

|

#### 修改配置文件

|

||||||

|

请参考

|

||||||

|

- [🎀 新手配置指南](./installation_cute.md) - 通俗易懂的配置教程,适合初次使用的猫娘

|

||||||

|

- [⚙️ 标准配置指南](./installation_standard.md) - 简明专业的配置说明,适合有经验的用户

|

||||||

|

|

||||||

```

|

|

||||||

# API凭证配置

|

|

||||||

SILICONFLOW_KEY=your_key # 硅基流动API密钥

|

|

||||||

SILICONFLOW_BASE_URL=https://api.siliconflow.cn/v1/ # 硅基流动API地址

|

|

||||||

|

|

||||||

DEEP_SEEK_KEY=your_key # DeepSeek API密钥

|

|

||||||

DEEP_SEEK_BASE_URL=https://api.deepseek.com/v1 # DeepSeek API地址

|

|

||||||

|

|

||||||

CHAT_ANY_WHERE_KEY=your_key # ChatAnyWhere API密钥

|

|

||||||

CHAT_ANY_WHERE_BASE_URL=https://api.chatanywhere.tech/v1 # ChatAnyWhere API地址

|

|

||||||

```

|

|

||||||

|

|

||||||

#### 在bot_config.toml中引用API凭证:

|

|

||||||

|

|

||||||

```

|

|

||||||

[model.llm_reasoning]

|

|

||||||

name = "Pro/deepseek-ai/DeepSeek-R1"

|

|

||||||

base_url = "SILICONFLOW_BASE_URL" # 引用.env.prod中定义的地址

|

|

||||||

key = "SILICONFLOW_KEY" # 引用.env.prod中定义的密钥

|

|

||||||

```

|

|

||||||

|

|

||||||

如需切换到其他API服务,只需修改引用:

|

|

||||||

|

|

||||||

```

|

|

||||||

[model.llm_reasoning]

|

|

||||||

name = "Pro/deepseek-ai/DeepSeek-R1"

|

|

||||||

base_url = "DEEP_SEEK_BASE_URL" # 切换为DeepSeek服务

|

|

||||||

key = "DEEP_SEEK_KEY" # 使用DeepSeek密钥

|

|

||||||

```

|

|

||||||

|

|

||||||

#### 配置文件详解

|

|

||||||

|

|

||||||

##### 环境配置文件 (.env.prod)

|

|

||||||

|

|

||||||

```

|

|

||||||

# API配置

|

|

||||||

SILICONFLOW_KEY=your_key

|

|

||||||

SILICONFLOW_BASE_URL=https://api.siliconflow.cn/v1/

|

|

||||||

DEEP_SEEK_KEY=your_key

|

|

||||||

DEEP_SEEK_BASE_URL=https://api.deepseek.com/v1

|

|

||||||

CHAT_ANY_WHERE_KEY=your_key

|

|

||||||

CHAT_ANY_WHERE_BASE_URL=https://api.chatanywhere.tech/v1

|

|

||||||

|

|

||||||

# 服务配置

|

|

||||||

HOST=127.0.0.1 # 如果使用Docker部署,需要改成0.0.0.0,否则QQ消息无法传入

|

|

||||||

PORT=8080

|

|

||||||

|

|

||||||

# 数据库配置

|

|

||||||

MONGODB_HOST=127.0.0.1 # 如果使用Docker部署,需要改成数据库容器的名字,默认是mongodb

|

|

||||||

MONGODB_PORT=27017

|

|

||||||

DATABASE_NAME=MegBot

|

|

||||||

MONGODB_USERNAME = "" # 数据库用户名

|

|

||||||

MONGODB_PASSWORD = "" # 数据库密码

|

|

||||||

MONGODB_AUTH_SOURCE = "" # 认证数据库

|

|

||||||

|

|

||||||

# 插件配置

|

|

||||||

PLUGINS=["src2.plugins.chat"]

|

|

||||||

```

|

|

||||||

|

|

||||||

##### 机器人配置文件 (bot_config.toml)

|

|

||||||

|

|

||||||

```

|

|

||||||

[bot]

|

|

||||||

qq = "机器人QQ号" # 必填

|

|

||||||

nickname = "麦麦" # 机器人昵称(你希望机器人怎么称呼它自己)

|

|

||||||

|

|

||||||

[personality]

|

|

||||||

prompt_personality = [

|

|

||||||

"曾经是一个学习地质的女大学生,现在学习心理学和脑科学,你会刷贴吧",

|

|

||||||

"是一个女大学生,你有黑色头发,你会刷小红书"

|

|

||||||

]

|

|

||||||

prompt_schedule = "一个曾经学习地质,现在学习心理学和脑科学的女大学生,喜欢刷qq,贴吧,知乎和小红书"

|

|

||||||

|

|

||||||

[message]

|

|

||||||

min_text_length = 2 # 最小回复长度

|

|

||||||

max_context_size = 15 # 上下文记忆条数

|

|

||||||

emoji_chance = 0.2 # 表情使用概率

|

|

||||||

ban_words = [] # 禁用词列表

|

|

||||||

|

|

||||||

[emoji]

|

|

||||||

auto_save = true # 自动保存表情

|

|

||||||

enable_check = false # 启用表情审核

|

|

||||||

check_prompt = "符合公序良俗"

|

|

||||||

|

|

||||||

[groups]

|

|

||||||

talk_allowed = [] # 允许对话的群号

|

|

||||||

talk_frequency_down = [] # 降低回复频率的群号

|

|

||||||

ban_user_id = [] # 禁止回复的用户QQ号

|

|

||||||

|

|

||||||

[others]

|

|

||||||

enable_advance_output = true # 启用详细日志

|

|

||||||

enable_kuuki_read = true # 启用场景理解

|

|

||||||

|

|

||||||

# 模型配置

|

|

||||||

[model.llm_reasoning] # 推理模型

|

|

||||||

name = "Pro/deepseek-ai/DeepSeek-R1"

|

|

||||||

base_url = "SILICONFLOW_BASE_URL"

|

|

||||||

key = "SILICONFLOW_KEY"

|

|

||||||

|

|

||||||

[model.llm_reasoning_minor] # 轻量推理模型

|

|

||||||

name = "deepseek-ai/DeepSeek-R1-Distill-Qwen-32B"

|

|

||||||

base_url = "SILICONFLOW_BASE_URL"

|

|

||||||

key = "SILICONFLOW_KEY"

|

|

||||||

|

|

||||||

[model.llm_normal] # 对话模型

|

|

||||||

name = "Pro/deepseek-ai/DeepSeek-V3"

|

|

||||||

base_url = "SILICONFLOW_BASE_URL"

|

|

||||||

key = "SILICONFLOW_KEY"

|

|

||||||

|

|

||||||

[model.llm_normal_minor] # 备用对话模型

|

|

||||||

name = "deepseek-ai/DeepSeek-V2.5"

|

|

||||||

base_url = "SILICONFLOW_BASE_URL"

|

|

||||||

key = "SILICONFLOW_KEY"

|

|

||||||

|

|

||||||

[model.vlm] # 图像识别模型

|

|

||||||

name = "deepseek-ai/deepseek-vl2"

|

|

||||||

base_url = "SILICONFLOW_BASE_URL"

|

|

||||||

key = "SILICONFLOW_KEY"

|

|

||||||

|

|

||||||

[model.embedding] # 文本向量模型

|

|

||||||

name = "BAAI/bge-m3"

|

|

||||||

base_url = "SILICONFLOW_BASE_URL"

|

|

||||||

key = "SILICONFLOW_KEY"

|

|

||||||

|

|

||||||

|

|

||||||

[topic.llm_topic]

|

|

||||||

name = "Pro/deepseek-ai/DeepSeek-V3"

|

|

||||||

base_url = "SILICONFLOW_BASE_URL"

|

|

||||||

key = "SILICONFLOW_KEY"

|

|

||||||

```

|

|

||||||

|

|

||||||

**step # 6** 运行

|

**step # 6** 运行

|

||||||

|

|

||||||

@@ -438,7 +320,7 @@ sudo systemctl enable bot.service # 启动bot服务

|

|||||||

sudo systemctl status bot.service # 检查bot服务状态

|

sudo systemctl status bot.service # 检查bot服务状态

|

||||||

```

|

```

|

||||||

|

|

||||||

```

|

```bash

|

||||||

python bot.py

|

python bot.py # 运行麦麦

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|||||||

@@ -6,7 +6,7 @@

|

|||||||

- QQ小号(QQ框架的使用可能导致qq被风控,严重(小概率)可能会导致账号封禁,强烈不推荐使用大号)

|

- QQ小号(QQ框架的使用可能导致qq被风控,严重(小概率)可能会导致账号封禁,强烈不推荐使用大号)

|

||||||

- 可用的大模型API

|

- 可用的大模型API

|

||||||

- 一个AI助手,网上随便搜一家打开来用都行,可以帮你解决一些不懂的问题

|

- 一个AI助手,网上随便搜一家打开来用都行,可以帮你解决一些不懂的问题

|

||||||

- 以下内容假设你对Linux系统有一定的了解,如果觉得难以理解,请直接用Windows系统部署[Windows系统部署指南](./manual_deploy_windows.md)

|

- 以下内容假设你对Linux系统有一定的了解,如果觉得难以理解,请直接用Windows系统部署[Windows系统部署指南](./manual_deploy_windows.md)或[使用Windows一键包部署](https://github.com/MaiM-with-u/MaiBot/releases/tag/EasyInstall-windows)

|

||||||

|

|

||||||

## 你需要知道什么?

|

## 你需要知道什么?

|

||||||

|

|

||||||

@@ -24,6 +24,9 @@

|

|||||||

|

|

||||||

---

|

---

|

||||||

|

|

||||||

|

## 一键部署

|

||||||

|

请下载并运行项目根目录中的run.sh并按照提示安装,部署完成后请参照后续配置指南进行配置

|

||||||

|

|

||||||

## 环境配置

|

## 环境配置

|

||||||

|

|

||||||

### 1️⃣ **确认Python版本**

|

### 1️⃣ **确认Python版本**

|

||||||

@@ -36,17 +39,26 @@ python --version

|

|||||||

python3 --version

|

python3 --version

|

||||||

```

|

```

|

||||||

|

|

||||||

如果版本低于3.9,请更新Python版本。

|

如果版本低于3.9,请更新Python版本,目前建议使用python3.12

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

# Ubuntu/Debian

|

# Debian

|

||||||

sudo apt update

|

sudo apt update

|

||||||

sudo apt install python3.9

|

sudo apt install python3.12

|

||||||

# 如执行了这一步,建议在执行时将python3指向python3.9

|

# Ubuntu

|

||||||

# 更新替代方案,设置 python3.9 为默认的 python3 版本:

|

sudo add-apt-repository ppa:deadsnakes/ppa

|

||||||

sudo update-alternatives --install /usr/bin/python3 python3 /usr/bin/python3.9 1

|

sudo apt update

|

||||||

|

sudo apt install python3.12

|

||||||

|

|

||||||

|

# 执行完以上命令后,建议在执行时将python3指向python3.12

|

||||||

|

# 更新替代方案,设置 python3.12 为默认的 python3 版本:

|

||||||

|

sudo update-alternatives --install /usr/bin/python3 python3 /usr/bin/python3.12 1

|

||||||

sudo update-alternatives --config python3

|

sudo update-alternatives --config python3

|

||||||

```

|

```

|

||||||

|

建议再执行以下命令,使后续运行命令中的`python3`等同于`python`

|

||||||

|

```bash

|

||||||

|

sudo apt install python-is-python3

|

||||||

|

```

|

||||||

|

|

||||||

### 2️⃣ **创建虚拟环境**

|

### 2️⃣ **创建虚拟环境**

|

||||||

|

|

||||||
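Reviewer note: the hunk above states a minimum of Python 3.9 and recommends 3.12. A small sketch of how that rule could be checked before starting the bot; the function name `check_python` and the returned labels are illustrative assumptions, not part of the project.

```python
import sys

MIN_VERSION = (3, 9)    # minimum stated in the guide
RECOMMENDED = (3, 12)   # version the guide currently recommends


def check_python(version: tuple = tuple(sys.version_info[:2])) -> str:
    """Classify an interpreter version against the guide's requirements."""
    if version < MIN_VERSION:
        return "upgrade"        # too old, the guide asks you to update
    if version < RECOMMENDED:
        return "supported"      # meets the minimum but is not the recommended version
    return "recommended"


if __name__ == "__main__":
    print(f"Python {sys.version_info.major}.{sys.version_info.minor}: {check_python()}")
```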

@@ -73,7 +85,7 @@ pip install -r requirements.txt

|

|||||||

|

|

||||||

### 3️⃣ **安装并启动MongoDB**

|

### 3️⃣ **安装并启动MongoDB**

|

||||||

|

|

||||||

- 安装与启动:Debian参考[官方文档](https://docs.mongodb.com/manual/tutorial/install-mongodb-on-debian/),Ubuntu参考[官方文档](https://docs.mongodb.com/manual/tutorial/install-mongodb-on-ubuntu/)

|

- 安装与启动:请参考[官方文档](https://www.mongodb.com/zh-cn/docs/manual/administration/install-on-linux/#std-label-install-mdb-community-edition-linux),进入后选择自己的系统版本即可

|

||||||

- 默认连接本地27017端口

|

- 默认连接本地27017端口

|

||||||

|

|

||||||

---

|

---

|

||||||
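Reviewer note: the guide says the bot connects to MongoDB on local port 27017 by default. Before starting the bot it can help to confirm that something is listening there. The sketch below only tests TCP reachability with the standard library; it does not verify that the listener is actually MongoDB, and the function name `port_open` is an assumption.

```python
import socket


def port_open(host: str = "127.0.0.1", port: int = 27017, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    state = "reachable" if port_open() else "not reachable"
    print(f"MongoDB default port 27017 is {state}")
```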

@@ -82,7 +94,11 @@ pip install -r requirements.txt

|

|||||||

|

|

||||||

### 4️⃣ **安装NapCat框架**

|

### 4️⃣ **安装NapCat框架**

|

||||||

|

|

||||||

- 参考[NapCat官方文档](https://www.napcat.wiki/guide/boot/Shell#napcat-installer-linux%E4%B8%80%E9%94%AE%E4%BD%BF%E7%94%A8%E8%84%9A%E6%9C%AC-%E6%94%AF%E6%8C%81ubuntu-20-debian-10-centos9)安装

|

- 执行NapCat的Linux一键使用脚本(支持Ubuntu 20+/Debian 10+/Centos9)

|

||||||

|

```bash

|

||||||

|

curl -o napcat.sh https://nclatest.znin.net/NapNeko/NapCat-Installer/main/script/install.sh && sudo bash napcat.sh

|

||||||

|

```

|

||||||

|

- 如果你不想使用Napcat的脚本安装,可参考[Napcat-Linux手动安装](https://www.napcat.wiki/guide/boot/Shell-Linux-SemiAuto)

|

||||||

|

|

||||||

- 使用QQ小号登录,添加反向WS地址: `ws://127.0.0.1:8080/onebot/v11/ws`

|

- 使用QQ小号登录,添加反向WS地址: `ws://127.0.0.1:8080/onebot/v11/ws`

|

||||||

|

|

||||||

@@ -91,9 +107,17 @@ pip install -r requirements.txt

|

|||||||

## 配置文件设置

|

## 配置文件设置

|

||||||

|

|

||||||

### 5️⃣ **配置文件设置,让麦麦Bot正常工作**

|

### 5️⃣ **配置文件设置,让麦麦Bot正常工作**

|

||||||

|

可先运行一次

|

||||||

- 修改环境配置文件:`.env.prod`

|

```bash

|

||||||

- 修改机器人配置文件:`bot_config.toml`

|

# 在项目目录下操作

|

||||||

|

nb run

|

||||||

|

# 或

|

||||||

|

python3 bot.py

|

||||||

|

```

|

||||||

|

之后你就可以找到`.env.prod`和`bot_config.toml`这两个文件了

|

||||||

|

关于文件内容的配置请参考:

|

||||||

|

- [🎀 新手配置指南](./installation_cute.md) - 通俗易懂的配置教程,适合初次使用的猫娘

|

||||||

|

- [⚙️ 标准配置指南](./installation_standard.md) - 简明专业的配置说明,适合有经验的用户

|

||||||

|

|

||||||

---

|

---

|

||||||

|

|

||||||

|

|||||||

201

docs/manual_deploy_macos.md

Normal file

@@ -0,0 +1,201 @@

|

|||||||

|

# 📦 macOS系统手动部署MaiMbot麦麦指南

|

||||||

|

|

||||||

|

## 准备工作

|

||||||

|

|

||||||

|

- 一台搭载了macOS系统的设备(macOS 12.0 或以上)

|

||||||

|

- QQ小号(QQ框架的使用可能导致qq被风控,严重(小概率)可能会导致账号封禁,强烈不推荐使用大号)

|

||||||

|

- Homebrew包管理器

|

||||||

|

- 如未安装,你可以在https://github.com/Homebrew/brew/releases/latest 找到.pkg格式的安装包

|

||||||

|

- 可用的大模型API

|

||||||

|

- 一个AI助手,网上随便搜一家打开来用都行,可以帮你解决一些不懂的问题

|

||||||

|

- 以下内容假设你对macOS系统有一定的了解,如果觉得难以理解,请直接用Windows系统部署[Windows系统部署指南](./manual_deploy_windows.md)或[使用Windows一键包部署](https://github.com/MaiM-with-u/MaiBot/releases/tag/EasyInstall-windows)

|

||||||

|

- 终端应用(iTerm2等)

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

## 环境配置

|

||||||

|

|

||||||

|

### 1️⃣ **Python环境配置**

|

||||||

|

|

||||||

|

```bash

|

||||||

|

# 检查Python版本(macOS自带python可能为2.7)

|

||||||

|

python3 --version

|

||||||

|

|

||||||

|

# 通过Homebrew安装Python

|

||||||

|

brew install python@3.12

|

||||||

|

|

||||||

|

# 设置环境变量(如使用zsh)

|

||||||

|

echo 'export PATH="/usr/local/opt/python@3.12/bin:$PATH"' >> ~/.zshrc

|

||||||

|

source ~/.zshrc

|

||||||

|

|

||||||

|

# 验证安装

|

||||||

|

python3 --version # 应显示3.12.x

|

||||||

|

pip3 --version # 应关联3.12版本

|

||||||

|

```

|

||||||

|

|

||||||

|

### 2️⃣ **创建虚拟环境**

|

||||||

|

|

||||||

|

```bash

|

||||||

|

# 方法1:使用venv(推荐)

|

||||||

|

python3 -m venv maimbot-venv

|

||||||

|

source maimbot-venv/bin/activate # 激活虚拟环境

|

||||||

|

|

||||||

|

# 方法2:使用conda

|

||||||

|

brew install --cask miniconda

|

||||||

|

conda create -n maimbot python=3.9

|

||||||

|

conda activate maimbot # 激活虚拟环境

|

||||||

|

|

||||||

|

# 安装项目依赖

|

||||||

|

# 请确保已经进入虚拟环境再执行

|

||||||

|

pip install -r requirements.txt

|

||||||

|

```

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

## 数据库配置

|

||||||

|

|

||||||

|

### 3️⃣ **安装MongoDB**

|

||||||

|

|

||||||

|

请参考[官方文档](https://www.mongodb.com/zh-cn/docs/manual/tutorial/install-mongodb-on-os-x/#install-mongodb-community-edition)

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

## NapCat

|

||||||

|

|

||||||

|

### 4️⃣ **安装与配置Napcat**

|

||||||

|

- 安装

|

||||||

|

可以使用Napcat官方提供的[macOS安装工具](https://github.com/NapNeko/NapCat-Mac-Installer/releases/)

|

||||||

|

由于权限问题,补丁过程需要手动替换 package.json,请注意备份原文件~

|

||||||

|

- 配置

|

||||||

|

使用QQ小号登录,添加反向WS地址: `ws://127.0.0.1:8080/onebot/v11/ws`

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

## 配置文件设置

|

||||||

|

|

||||||

|

### 5️⃣ **生成配置文件**

|

||||||

|

可先运行一次

|

||||||

|

```bash

|

||||||

|

# 在项目目录下操作

|

||||||

|

nb run

|

||||||

|

# 或

|

||||||

|

python3 bot.py

|

||||||

|

```

|

||||||

|

|

||||||

|

之后你就可以找到`.env.prod`和`bot_config.toml`这两个文件了

|

||||||

|

|

||||||

|

关于文件内容的配置请参考:

|

||||||

|

- [🎀 新手配置指南](./installation_cute.md) - 通俗易懂的配置教程,适合初次使用的猫娘

|

||||||

|

- [⚙️ 标准配置指南](./installation_standard.md) - 简明专业的配置说明,适合有经验的用户

|

||||||

|

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

## 启动机器人

|

||||||

|

|

||||||

|

### 6️⃣ **启动麦麦机器人**

|

||||||

|

|

||||||

|

```bash

|

||||||

|

# 在项目目录下操作

|

||||||

|

nb run

|

||||||

|

# 或

|

||||||

|

python3 bot.py

|

||||||

|

```

|

||||||

|

|

||||||

|

## 启动管理

|

||||||

|

|

||||||

|

### 7️⃣ **通过launchd管理服务**

|

||||||

|

|

||||||

|

创建plist文件:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

nano ~/Library/LaunchAgents/com.maimbot.plist

|

||||||

|

```

|

||||||

|

|

||||||

|

内容示例(需替换实际路径):

|

||||||

|

|

||||||

|

```xml

|

||||||

|

<?xml version="1.0" encoding="UTF-8"?>

|

||||||

|

<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">

|

||||||

|

<plist version="1.0">

|

||||||

|

<dict>

|

||||||

|

<key>Label</key>

|

||||||

|

<string>com.maimbot</string>

|

||||||

|

|

||||||

|

<key>ProgramArguments</key>

|

||||||

|

<array>

|

||||||

|

<string>/path/to/maimbot-venv/bin/python</string>

|

||||||

|

<string>/path/to/MaiMbot/bot.py</string>

|

||||||

|

</array>

|

||||||

|

|

||||||

|

<key>WorkingDirectory</key>

|

||||||

|

<string>/path/to/MaiMbot</string>

|

||||||

|

|

||||||

|

<key>StandardOutPath</key>

|

||||||

|

<string>/tmp/maimbot.log</string>

|

||||||

|

<key>StandardErrorPath</key>

|

||||||

|

<string>/tmp/maimbot.err</string>

|

||||||

|

|

||||||

|

<key>RunAtLoad</key>

|

||||||

|

<true/>

|

||||||

|

<key>KeepAlive</key>

|

||||||

|

<true/>

|

||||||

|

</dict>

|

||||||

|

</plist>

|

||||||

|

```

|

||||||

|

|

||||||

|

加载服务:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

launchctl load ~/Library/LaunchAgents/com.maimbot.plist

|

||||||

|

launchctl start com.maimbot

|

||||||

|

```

|

||||||

|

|

||||||

|

查看日志:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

tail -f /tmp/maimbot.log

|

||||||

|

```

|

||||||
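Reviewer note: the launchd property list shown earlier in this file has to be edited by hand to replace the `/path/to/...` placeholders. As an alternative sketch, the same keys can be generated with Python's standard `plistlib`. The function name `build_launch_agent` is hypothetical; the label, log paths, and keys are copied from the example in the guide.

```python
import plistlib
from pathlib import Path


def build_launch_agent(python_bin: str, project_dir: str) -> bytes:
    """Return XML plist bytes equivalent to the guide's com.maimbot example."""
    job = {
        "Label": "com.maimbot",
        # Interpreter from the virtual environment, then the script to run.
        "ProgramArguments": [python_bin, str(Path(project_dir) / "bot.py")],
        "WorkingDirectory": project_dir,
        "StandardOutPath": "/tmp/maimbot.log",
        "StandardErrorPath": "/tmp/maimbot.err",
        "RunAtLoad": True,   # start when the agent is loaded
        "KeepAlive": True,   # restart the process if it exits
    }
    return plistlib.dumps(job)


if __name__ == "__main__":
    # Paths here are placeholders, exactly as in the guide.
    data = build_launch_agent("/path/to/maimbot-venv/bin/python", "/path/to/MaiMbot")
    print(data.decode("utf-8"))
```

The output could be written to `~/Library/LaunchAgents/com.maimbot.plist` and then loaded with the `launchctl` commands from the guide.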

|

|

||||||

|

---

|

||||||

|

|

||||||

|

## 常见问题处理

|

||||||

|

|

||||||

|

1. **权限问题**

|

||||||

|

```bash

|

||||||

|

# 遇到文件权限错误时

|

||||||

|

chmod -R 755 ~/Documents/MaiMbot

|

||||||

|

```

|

||||||

|

|

||||||

|

2. **Python模块缺失**

|

||||||

|

```bash

|

||||||

|

# 确保在虚拟环境中

|

||||||

|

source maimbot-venv/bin/activate # 或 conda 激活

|

||||||

|

pip install --force-reinstall -r requirements.txt

|

||||||

|

```

|

||||||

|

|

||||||

|

3. **MongoDB连接失败**

|

||||||

|

```bash

|

||||||

|

# 检查服务状态

|

||||||

|

brew services list

|

||||||

|

# 重置数据库权限

|

||||||

|

mongosh --eval "db.adminCommand({setFeatureCompatibilityVersion: '5.0'})"

|

||||||

|

```

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

## 系统优化建议

|

||||||

|

|

||||||

|

1. **关闭App Nap**

|

||||||

|

```bash

|

||||||

|

# 防止系统休眠NapCat进程

|

||||||

|

defaults write NSGlobalDomain NSAppSleepDisabled -bool YES

|

||||||

|

```

|

||||||

|

|

||||||

|

2. **电源管理设置**

|

||||||

|

```bash

|

||||||

|

# 防止睡眠影响机器人运行

|

||||||

|

sudo systemsetup -setcomputersleep Never

|

||||||

|

```

|

||||||

|

|

||||||

|

---

|

||||||

|


BIN

docs/pic/MongoDB_Ubuntu_guide.png

Normal file

|

After Width: | Height: | Size: 14 KiB |

BIN

docs/pic/QQ_Download_guide_Linux.png

Normal file

|

After Width: | Height: | Size: 37 KiB |

BIN

docs/pic/linux_beginner_downloadguide.png

Normal file

|

After Width: | Height: | Size: 10 KiB |

|


@@ -16,7 +16,7 @@

|

|||||||

|

|

||||||

docker-compose.yml: https://github.com/SengokuCola/MaiMBot/blob/main/docker-compose.yml

|

docker-compose.yml: https://github.com/SengokuCola/MaiMBot/blob/main/docker-compose.yml

|

||||||

下载后打开,将 `services-mongodb-image` 修改为 `mongo:4.4.24`。这是因为最新的 MongoDB 强制要求 AVX 指令集,而群晖似乎不支持这个指令集

|

下载后打开,将 `services-mongodb-image` 修改为 `mongo:4.4.24`。这是因为最新的 MongoDB 强制要求 AVX 指令集,而群晖似乎不支持这个指令集

|

||||||

|

|

||||||

|

|

||||||

bot_config.toml: https://github.com/SengokuCola/MaiMBot/blob/main/template/bot_config_template.toml

|

bot_config.toml: https://github.com/SengokuCola/MaiMBot/blob/main/template/bot_config_template.toml

|

||||||

下载后,重命名为 `bot_config.toml`

|

下载后,重命名为 `bot_config.toml`

|

||||||

@@ -26,13 +26,13 @@ bot_config.toml: https://github.com/SengokuCola/MaiMBot/blob/main/template/bot_c

|

|||||||

下载后,重命名为 `.env.prod`

|

下载后,重命名为 `.env.prod`

|

||||||

将 `HOST` 修改为 `0.0.0.0`,确保 maimbot 能被 napcat 访问

|

将 `HOST` 修改为 `0.0.0.0`,确保 maimbot 能被 napcat 访问

|

||||||

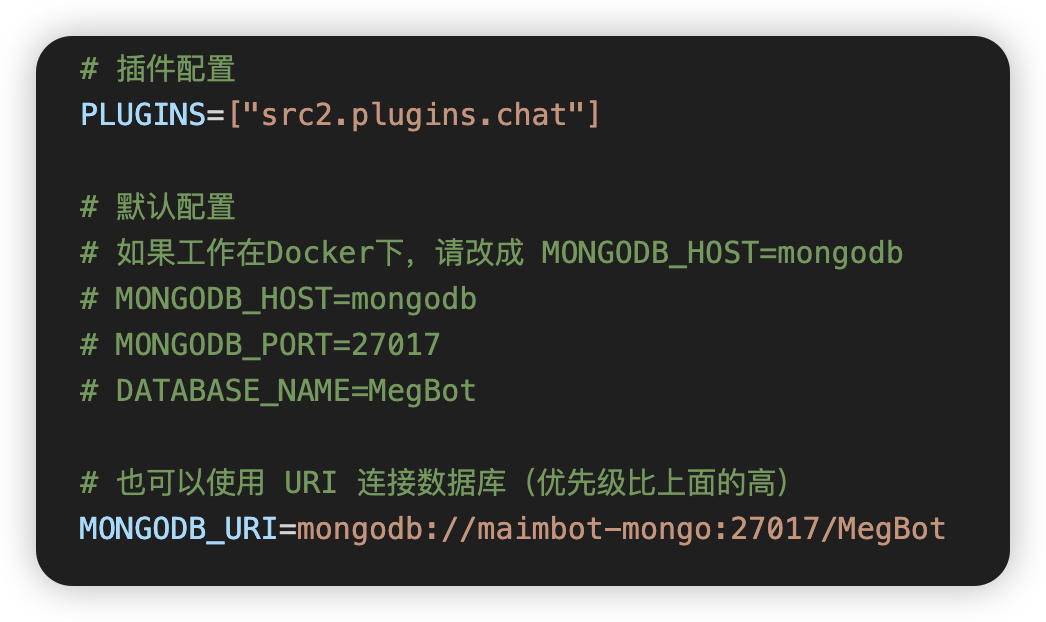

按下图修改 mongodb 设置,使用 `MONGODB_URI`

|

按下图修改 mongodb 设置,使用 `MONGODB_URI`

|

||||||

|

|

||||||

|

|

||||||

把 `bot_config.toml` 和 `.env.prod` 放入之前创建的 `MaiMBot`文件夹

|

把 `bot_config.toml` 和 `.env.prod` 放入之前创建的 `MaiMBot`文件夹

|

||||||

|

|

||||||

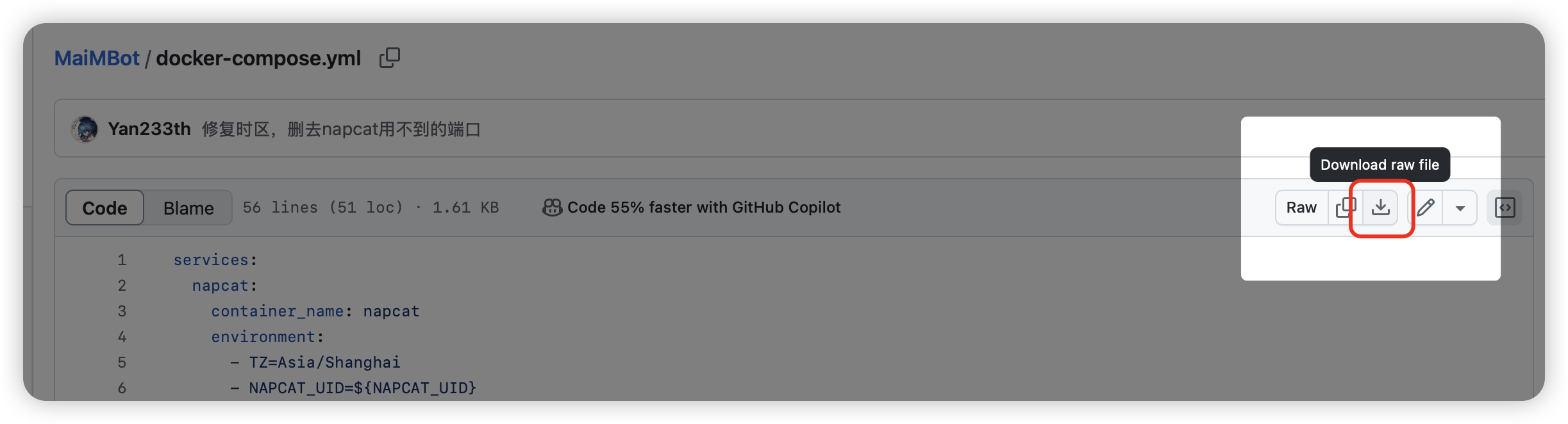

#### 如何下载?

|

#### 如何下载?

|

||||||

|

|

||||||

点这里!

|

点这里!

|

||||||

|

|

||||||

### 创建项目

|

### 创建项目

|

||||||

|

|

||||||

@@ -45,7 +45,7 @@ bot_config.toml: https://github.com/SengokuCola/MaiMBot/blob/main/template/bot_c

|

|||||||

|

|

||||||

图例:

|

图例:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

一路点下一步,等待项目创建完成

|

一路点下一步,等待项目创建完成

|

||||||

|

|

||||||

|

|||||||

@@ -31,9 +31,10 @@ _handler_registry: Dict[str, List[int]] = {}

|

|||||||

current_file_path = Path(__file__).resolve()

|

current_file_path = Path(__file__).resolve()

|

||||||

LOG_ROOT = "logs"

|

LOG_ROOT = "logs"

|

||||||

|

|

||||||

ENABLE_ADVANCE_OUTPUT = False

|

SIMPLE_OUTPUT = os.getenv("SIMPLE_OUTPUT", "false")

|

||||||

|

print(f"SIMPLE_OUTPUT: {SIMPLE_OUTPUT}")

|

||||||

|

|

||||||

if ENABLE_ADVANCE_OUTPUT:

|

if not SIMPLE_OUTPUT:

|

||||||

# 默认全局配置

|

# 默认全局配置

|

||||||

DEFAULT_CONFIG = {

|

DEFAULT_CONFIG = {

|

||||||

# 日志级别配置

|

# 日志级别配置

|

||||||

@@ -85,7 +86,6 @@ MEMORY_STYLE_CONFIG = {

|

|||||||

},

|

},

|

||||||

}

|

}

|

||||||

|

|

||||||

# 海马体日志样式配置

|

|

||||||

SENDER_STYLE_CONFIG = {

|

SENDER_STYLE_CONFIG = {

|

||||||

"advanced": {

|

"advanced": {

|

||||||

"console_format": (

|

"console_format": (

|

||||||

@@ -152,17 +152,17 @@ CHAT_STYLE_CONFIG = {

|

|||||||

"file_format": ("{time:YYYY-MM-DD HH:mm:ss} | {level: <8} | {extra[module]: <15} | 见闻 | {message}"),

|

"file_format": ("{time:YYYY-MM-DD HH:mm:ss} | {level: <8} | {extra[module]: <15} | 见闻 | {message}"),

|

||||||

},

|

},

|

||||||

"simple": {

|

"simple": {

|

||||||

"console_format": ("<green>{time:MM-DD HH:mm}</green> | <light-blue>见闻</light-blue> | {message}"),

|

"console_format": ("<green>{time:MM-DD HH:mm}</green> | <light-blue>见闻</light-blue> | <green>{message}</green>"), # noqa: E501

|

||||||

"file_format": ("{time:YYYY-MM-DD HH:mm:ss} | {level: <8} | {extra[module]: <15} | 见闻 | {message}"),

|

"file_format": ("{time:YYYY-MM-DD HH:mm:ss} | {level: <8} | {extra[module]: <15} | 见闻 | {message}"),

|

||||||

},

|

},

|

||||||

}

|

}

|

||||||

|

|

||||||

# Select the configuration based on ENABLE_ADVANCE_OUTPUT

|

# Select the configuration based on SIMPLE_OUTPUT

|

||||||

MEMORY_STYLE_CONFIG = MEMORY_STYLE_CONFIG["advanced"] if ENABLE_ADVANCE_OUTPUT else MEMORY_STYLE_CONFIG["simple"]

|

MEMORY_STYLE_CONFIG = MEMORY_STYLE_CONFIG["simple"] if SIMPLE_OUTPUT else MEMORY_STYLE_CONFIG["advanced"]

|

||||||

TOPIC_STYLE_CONFIG = TOPIC_STYLE_CONFIG["advanced"] if ENABLE_ADVANCE_OUTPUT else TOPIC_STYLE_CONFIG["simple"]

|

TOPIC_STYLE_CONFIG = TOPIC_STYLE_CONFIG["simple"] if SIMPLE_OUTPUT else TOPIC_STYLE_CONFIG["advanced"]

|

||||||

SENDER_STYLE_CONFIG = SENDER_STYLE_CONFIG["advanced"] if ENABLE_ADVANCE_OUTPUT else SENDER_STYLE_CONFIG["simple"]

|

SENDER_STYLE_CONFIG = SENDER_STYLE_CONFIG["simple"] if SIMPLE_OUTPUT else SENDER_STYLE_CONFIG["advanced"]

|

||||||

LLM_STYLE_CONFIG = LLM_STYLE_CONFIG["advanced"] if ENABLE_ADVANCE_OUTPUT else LLM_STYLE_CONFIG["simple"]

|

LLM_STYLE_CONFIG = LLM_STYLE_CONFIG["simple"] if SIMPLE_OUTPUT else LLM_STYLE_CONFIG["advanced"]

|

||||||

CHAT_STYLE_CONFIG = CHAT_STYLE_CONFIG["advanced"] if ENABLE_ADVANCE_OUTPUT else CHAT_STYLE_CONFIG["simple"]

|

CHAT_STYLE_CONFIG = CHAT_STYLE_CONFIG["simple"] if SIMPLE_OUTPUT else CHAT_STYLE_CONFIG["advanced"]

|

||||||

|

|

||||||

|

|

||||||

def is_registered_module(record: dict) -> bool:

|

def is_registered_module(record: dict) -> bool:

|

||||||

|

|||||||
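The hunk above replaces the `ENABLE_ADVANCE_OUTPUT` constant with a `SIMPLE_OUTPUT` environment variable. Since `os.getenv` returns a string, any non-empty value (including `"false"`) is truthy, so the flag may need explicit parsing before it is used in `if not SIMPLE_OUTPUT:` or in the style selection. A minimal sketch of one way to do that, assuming a hypothetical helper `env_flag`, a hypothetical `select_style` function, and a stand-in `STYLE_CONFIG` dict, none of which are part of the repository:

```python
import os

# Values treated as "on"; this set is an assumption, not taken from the project.
_TRUTHY = {"1", "true", "yes", "on"}


def env_flag(name: str, default: bool = False) -> bool:
    """Read an environment variable and interpret it as a boolean."""
    raw = os.getenv(name)
    if raw is None:
        return default
    return raw.strip().lower() in _TRUTHY


# Stand-in for one of the *_STYLE_CONFIG dicts touched by the diff.
STYLE_CONFIG = {
    "advanced": {"console_format": "{time} | {level} | {message}"},
    "simple": {"console_format": "{time} | {message}"},
}


def select_style(config: dict, simple_output: bool) -> dict:
    """Pick the simple or advanced variant, mirroring the selection in the diff."""
    return config["simple"] if simple_output else config["advanced"]
```

With a helper like this, `SIMPLE_OUTPUT=false` in `.env.prod` would select the advanced style instead of being treated as enabled.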

@@ -92,12 +92,13 @@ async def _(bot: Bot):

|

|||||||

|

|

||||||

@msg_in.handle()

|

@msg_in.handle()

|

||||||

async def _(bot: Bot, event: MessageEvent, state: T_State):

|

async def _(bot: Bot, event: MessageEvent, state: T_State):

|

||||||

#Handle merged-forward messages

|

# Handle merged-forward messages

|

||||||

if "forward" in event.message:

|

if "forward" in event.message:

|

||||||

await chat_bot.handle_forward_message(event , bot)

|

await chat_bot.handle_forward_message(event, bot)

|

||||||

else :

|

else:

|

||||||

await chat_bot.handle_message(event, bot)

|

await chat_bot.handle_message(event, bot)

|

||||||

|

|

||||||

|

|

||||||

@notice_matcher.handle()

|

@notice_matcher.handle()

|

||||||

async def _(bot: Bot, event: NoticeEvent, state: T_State):

|

async def _(bot: Bot, event: NoticeEvent, state: T_State):

|

||||||

logger.debug(f"收到通知:{event}")

|

logger.debug(f"收到通知:{event}")

|

||||||

@@ -110,7 +111,7 @@ async def build_memory_task():

|

|||||||

"""每build_memory_interval秒执行一次记忆构建"""

|

"""每build_memory_interval秒执行一次记忆构建"""

|

||||||

logger.debug("[记忆构建]------------------------------------开始构建记忆--------------------------------------")

|

logger.debug("[记忆构建]------------------------------------开始构建记忆--------------------------------------")

|

||||||

start_time = time.time()

|

start_time = time.time()

|

||||||

await hippocampus.operation_build_memory(chat_size=20)

|

await hippocampus.operation_build_memory()

|

||||||

end_time = time.time()

|

end_time = time.time()

|

||||||

logger.success(

|

logger.success(

|

||||||

f"[记忆构建]--------------------------记忆构建完成:耗时: {end_time - start_time:.2f} "

|

f"[记忆构建]--------------------------记忆构建完成:耗时: {end_time - start_time:.2f} "

|

||||||

|

|||||||

@@ -154,7 +154,7 @@ class ChatBot:

|

|||||||

)

|

)

|

||||||

# Timestamp at which thinking starts

|

# Timestamp at which thinking starts

|

||||||

thinking_time_point = round(time.time(), 2)

|

thinking_time_point = round(time.time(), 2)

|

||||||

logger.info(f"开始思考的时间点: {thinking_time_point}")

|

# logger.debug(f"开始思考的时间点: {thinking_time_point}")

|

||||||

think_id = "mt" + str(thinking_time_point)

|

think_id = "mt" + str(thinking_time_point)

|

||||||

thinking_message = MessageThinking(

|

thinking_message = MessageThinking(

|

||||||

message_id=think_id,

|

message_id=think_id,

|

||||||

@@ -418,13 +418,12 @@ class ChatBot:

|

|||||||

# Banned users; applies to both private and group chats

|

# Banned users; applies to both private and group chats

|

||||||

if event.user_id in global_config.ban_user_id:

|

if event.user_id in global_config.ban_user_id:

|

||||||

return

|

return

|

||||||

|

|

||||||

if isinstance(event, GroupMessageEvent):

|

if isinstance(event, GroupMessageEvent):

|

||||||

if event.group_id:

|

if event.group_id:

|

||||||

if event.group_id not in global_config.talk_allowed_groups:

|

if event.group_id not in global_config.talk_allowed_groups:

|

||||||

return

|

return

|

||||||

|

|

||||||

|

|

||||||

# Fetch the details of the merged-forward message

|

# Fetch the details of the merged-forward message

|

||||||

forward_info = await bot.get_forward_msg(message_id=event.message_id)

|

forward_info = await bot.get_forward_msg(message_id=event.message_id)

|

||||||

messages = forward_info["messages"]

|

messages = forward_info["messages"]

|

||||||

@@ -434,17 +433,17 @@ class ChatBot:

|

|||||||

for node in messages:

|

for node in messages:

|

||||||

# Extract the sender's nickname

|

# Extract the sender's nickname

|

||||||

nickname = node["sender"].get("nickname", "未知用户")

|

nickname = node["sender"].get("nickname", "未知用户")

|

||||||

|

|

||||||

# Recursively process the message content

|

# Recursively process the message content

|

||||||

message_content = await self.process_message_segments(node["message"],layer=0)

|

message_content = await self.process_message_segments(node["message"], layer=0)

|

||||||

|

|

||||||

# Concatenate as 【nickname】 + content

|

# Concatenate as 【nickname】 + content

|

||||||

processed_messages.append(f"【{nickname}】{message_content}")

|

processed_messages.append(f"【{nickname}】{message_content}")

|

||||||

|

|

||||||

# Combine all messages

|

# Combine all messages

|

||||||

combined_message = "\n".join(processed_messages)

|

combined_message = "\n".join(processed_messages)

|

||||||

combined_message = f"合并转发消息内容:\n{combined_message}"

|

combined_message = f"合并转发消息内容:\n{combined_message}"

|

||||||

|

|

||||||

# Build the user info (using the sender of the forwarded message)

|

# Build the user info (using the sender of the forwarded message)

|

||||||

user_info = UserInfo(

|

user_info = UserInfo(

|

||||||

user_id=event.user_id,

|

user_id=event.user_id,

|

||||||

@@ -456,11 +455,7 @@ class ChatBot:

|

|||||||

# Build the group info (if this is a group chat)

|

# Build the group info (if this is a group chat)

|

||||||

group_info = None

|

group_info = None

|

||||||

if isinstance(event, GroupMessageEvent):

|

if isinstance(event, GroupMessageEvent):

|

||||||

group_info = GroupInfo(

|

group_info = GroupInfo(group_id=event.group_id, group_name=None, platform="qq")

|

||||||

group_id=event.group_id,

|

|

||||||

group_name=None,

|

|

||||||

platform="qq"

|

|

||||||

)

|

|

||||||

|

|

||||||

# Create the message object

|

# Create the message object

|

||||||

message_cq = MessageRecvCQ(

|

message_cq = MessageRecvCQ(

|

||||||

@@ -475,19 +470,19 @@ class ChatBot:

|

|||||||

# Enter the standard message-processing flow

|

# Enter the standard message-processing flow

|

||||||

await self.message_process(message_cq)

|

await self.message_process(message_cq)

|

||||||

|

|

||||||

async def process_message_segments(self, segments: list,layer:int) -> str:

|

async def process_message_segments(self, segments: list, layer: int) -> str:

|

||||||

"""递归处理消息段"""

|

"""递归处理消息段"""

|

||||||

parts = []

|

parts = []

|

||||||

for seg in segments:

|

for seg in segments:

|

||||||

part = await self.process_segment(seg,layer+1)

|

part = await self.process_segment(seg, layer + 1)

|

||||||

parts.append(part)

|

parts.append(part)

|

||||||

return "".join(parts)

|

return "".join(parts)

|

||||||

|

|

||||||

async def process_segment(self, seg: dict , layer:int) -> str:

|

async def process_segment(self, seg: dict, layer: int) -> str:

|

||||||

"""处理单个消息段"""

|

"""处理单个消息段"""

|

||||||

seg_type = seg["type"]

|

seg_type = seg["type"]

|

||||||

if layer > 3 :

|

if layer > 3:

|

||||||

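#Guard against something like a 100-layer forwarded message crashing 麦麦

|

# Guard against something like a 100-layer forwarded message crashing 麦麦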

|

||||||

return "【转发消息】"

|

return "【转发消息】"

|

||||||

if seg_type == "text":

|

if seg_type == "text":

|

||||||

return seg["data"]["text"]

|

return seg["data"]["text"]

|

||||||

@@ -504,13 +499,14 @@ class ChatBot:

|

|||||||

nested_messages.append("合并转发消息内容:")

|

nested_messages.append("合并转发消息内容:")

|

||||||

for node in nested_nodes:

|

for node in nested_nodes:

|

||||||

nickname = node["sender"].get("nickname", "未知用户")

|

nickname = node["sender"].get("nickname", "未知用户")

|

||||||

content = await self.process_message_segments(node["message"],layer=layer)

|

content = await self.process_message_segments(node["message"], layer=layer)

|

||||||

# nested_messages.append('-' * layer)

|

# nested_messages.append('-' * layer)

|

||||||

nested_messages.append(f"{'--' * layer}【{nickname}】{content}")

|

nested_messages.append(f"{'--' * layer}【{nickname}】{content}")

|

||||||

# nested_messages.append(f"{'--' * layer}合并转发第【{layer}】层结束")

|

# nested_messages.append(f"{'--' * layer}合并转发第【{layer}】层结束")

|

||||||

return "\n".join(nested_messages)

|

return "\n".join(nested_messages)

|

||||||

else:

|

else:

|

||||||

return f"[{seg_type}]"

|

return f"[{seg_type}]"

|

||||||

|

|

||||||

|

|

||||||

# Create the global ChatBot instance

|

# Create the global ChatBot instance

|

||||||

chat_bot = ChatBot()

|

chat_bot = ChatBot()

|

||||||

|

|||||||
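The `ChatBot` hunks above flatten merged-forward messages recursively and stop descending once `layer > 3`. The sketch below illustrates that depth guard in isolation. It is only an approximation: the `forward` branch is not fully visible in this diff, so the `data["content"]` layout, the English placeholder strings, and the free-function form (instead of methods on `ChatBot`) are assumptions.

```python
import asyncio

MAX_LAYER = 3  # same threshold as `layer > 3` in the diff
PLACEHOLDER = "[forwarded message]"  # the diff returns a Chinese placeholder instead


async def process_message_segments(segments: list, layer: int) -> str:
    """Process every segment one layer deeper and join the results."""
    parts = []
    for seg in segments:
        parts.append(await process_segment(seg, layer + 1))
    return "".join(parts)


async def process_segment(seg: dict, layer: int) -> str:
    """Render one segment; refuse to descend past MAX_LAYER."""
    if layer > MAX_LAYER:
        return PLACEHOLDER
    seg_type = seg["type"]
    if seg_type == "text":
        return seg["data"]["text"]
    if seg_type == "forward":
        # Assumption: nested nodes are embedded under data["content"].
        lines = []
        for node in seg["data"].get("content", []):
            nickname = node["sender"].get("nickname", "unknown")
            content = await process_message_segments(node["message"], layer=layer)
            lines.append(f"{'--' * layer}[{nickname}] {content}")
        return "\n".join(lines)
    return f"[{seg_type}]"
```

Because each call to `process_message_segments` adds one to `layer`, a pathological chain of nested forwards is cut off after a fixed number of levels rather than recursing without bound.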

@@ -56,7 +56,6 @@ class BotConfig:

|

|||||||

llm_reasoning: Dict[str, str] = field(default_factory=lambda: {})

|

llm_reasoning: Dict[str, str] = field(default_factory=lambda: {})

|

||||||

llm_reasoning_minor: Dict[str, str] = field(default_factory=lambda: {})

|

llm_reasoning_minor: Dict[str, str] = field(default_factory=lambda: {})

|

||||||

llm_normal: Dict[str, str] = field(default_factory=lambda: {})

|

llm_normal: Dict[str, str] = field(default_factory=lambda: {})

|

||||||

llm_normal_minor: Dict[str, str] = field(default_factory=lambda: {})

|

|

||||||

llm_topic_judge: Dict[str, str] = field(default_factory=lambda: {})

|

llm_topic_judge: Dict[str, str] = field(default_factory=lambda: {})

|

||||||

llm_summary_by_topic: Dict[str, str] = field(default_factory=lambda: {})

|

llm_summary_by_topic: Dict[str, str] = field(default_factory=lambda: {})

|

||||||

llm_emotion_judge: Dict[str, str] = field(default_factory=lambda: {})

|

llm_emotion_judge: Dict[str, str] = field(default_factory=lambda: {})

|

||||||

@@ -68,9 +67,9 @@ class BotConfig:

|

|||||||

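MODEL_V3_PROBABILITY: float = 0.1 # probability of using the V3 model

|

MODEL_V3_PROBABILITY: float = 0.1 # probability of using the V3 model

|

||||||

MODEL_R1_DISTILL_PROBABILITY: float = 0.1 # probability of using the R1 distilled model

|

MODEL_R1_DISTILL_PROBABILITY: float = 0.1 # probability of using the R1 distilled model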

|

||||||

|

|

||||||

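enable_advance_output: bool = False # whether to enable advanced output

|

# enable_advance_output: bool = False # whether to enable advanced output

|

||||||

enable_kuuki_read: bool = True # whether to enable the "read the room" feature

|

enable_kuuki_read: bool = True # whether to enable the "read the room" feature

|

||||||

enable_debug_output: bool = False # whether to enable debug output

|

# enable_debug_output: bool = False # whether to enable debug output

|

||||||

enable_friend_chat: bool = False # whether to enable friend (private) chat

|

enable_friend_chat: bool = False # whether to enable friend (private) chat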

|

||||||

|

|

||||||

mood_update_interval: float = 1.0 # mood update interval, in seconds

|

mood_update_interval: float = 1.0 # mood update interval, in seconds

|

||||||

@@ -106,6 +105,11 @@ class BotConfig:

|

|||||||

memory_forget_time: int = 24 # memory forgetting time (hours)

|

memory_forget_time: int = 24 # memory forgetting time (hours)

|

||||||

memory_forget_percentage: float = 0.01 # memory forgetting ratio

|

memory_forget_percentage: float = 0.01 # memory forgetting ratio

|

||||||

memory_compress_rate: float = 0.1 # memory compression rate

|

memory_compress_rate: float = 0.1 # memory compression rate

|

||||||

|

build_memory_sample_num: int = 10 # number of samples taken when building memory

|

||||||

|

build_memory_sample_length: int = 20 # length of each sample taken when building memory

|

||||||

|

memory_build_distribution: list = field(

|

||||||

|

default_factory=lambda: [4,2,0.6,24,8,0.4]

|

||||||

|

) # memory build distribution; parameters: distribution 1 mean, std dev, weight, distribution 2 mean, std dev, weight

|

||||||

memory_ban_words: list = field(

|

memory_ban_words: list = field(

|

||||||

default_factory=lambda: ["表情包", "图片", "回复", "聊天记录"]

|

default_factory=lambda: ["表情包", "图片", "回复", "聊天记录"]

|

||||||

) # default value for the newly added config option

|

) # default value for the newly added config option

|

||||||
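The new `memory_build_distribution` default, `[4,2,0.6,24,8,0.4]`, is documented as two (mean, standard deviation, weight) triples. How the project consumes these values is not shown in this diff. One plausible reading is a two-component normal mixture, and the sketch below draws samples under that assumption. The function name `sample_mixture` and the `seed` parameter are hypothetical.

```python
import random


def sample_mixture(params: list, n: int, seed=None) -> list:
    """Draw n values from a two-component normal mixture.

    params follows the order used in the config comment:
    [mean1, std1, weight1, mean2, std2, weight2].
    """
    mean1, std1, w1, mean2, std2, w2 = params
    rng = random.Random(seed)
    p_first = w1 / (w1 + w2)  # normalise in case the weights do not sum to 1
    samples = []
    for _ in range(n):
        if rng.random() < p_first:
            samples.append(rng.gauss(mean1, std1))
        else:
            samples.append(rng.gauss(mean2, std2))
    return samples


# Example call with the default from the diff and build_memory_sample_num = 10.
offsets = sample_mixture([4, 2, 0.6, 24, 8, 0.4], n=10, seed=0)
```

Under this reading, roughly 60% of the draws would cluster around 4 and the rest around 24. The unit of these values (hours, for example) is not stated in the diff.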

@@ -230,7 +234,6 @@ class BotConfig:

|

|||||||

"llm_reasoning",

|

"llm_reasoning",

|

||||||

"llm_reasoning_minor",

|

"llm_reasoning_minor",

|

||||||

"llm_normal",

|

"llm_normal",

|

||||||

"llm_normal_minor",

|

|

||||||

"llm_topic_judge",

|

"llm_topic_judge",

|

||||||

"llm_summary_by_topic",

|

"llm_summary_by_topic",

|

||||||

"llm_emotion_judge",

|

"llm_emotion_judge",

|

||||||

@@ -315,6 +318,20 @@ class BotConfig:

|

|||||||

"memory_forget_percentage", config.memory_forget_percentage

|

"memory_forget_percentage", config.memory_forget_percentage

|

||||||

)

|

)

|

||||||

config.memory_compress_rate = memory_config.get("memory_compress_rate", config.memory_compress_rate)

|

config.memory_compress_rate = memory_config.get("memory_compress_rate", config.memory_compress_rate)

|

||||||

|

if config.INNER_VERSION in SpecifierSet(">=0.0.11"):

|

||||||

|

config.memory_build_distribution = memory_config.get(

|

||||||

|

"memory_build_distribution",

|

||||||

|

config.memory_build_distribution

|

||||||

|

)

|

||||||

|

config.build_memory_sample_num = memory_config.get(

|

||||||

|

"build_memory_sample_num",

|

||||||

|

config.build_memory_sample_num

|

||||||

|

)

|

||||||

|

config.build_memory_sample_length = memory_config.get(

|

||||||

|

"build_memory_sample_length",

|

||||||

|

config.build_memory_sample_length

|

||||||

|

)

|

||||||

|

|

||||||

|

|

||||||

def remote(parent: dict):

|

def remote(parent: dict):

|

||||||

remote_config = parent["remote"]

|

remote_config = parent["remote"]

|

||||||

@@ -351,10 +368,10 @@ class BotConfig:

|

|||||||

|

|

||||||

def others(parent: dict):

|

def others(parent: dict):

|

||||||

others_config = parent["others"]

|

others_config = parent["others"]

|

||||||

config.enable_advance_output = others_config.get("enable_advance_output", config.enable_advance_output)

|

# config.enable_advance_output = others_config.get("enable_advance_output", config.enable_advance_output)

|

||||||

config.enable_kuuki_read = others_config.get("enable_kuuki_read", config.enable_kuuki_read)

|

config.enable_kuuki_read = others_config.get("enable_kuuki_read", config.enable_kuuki_read)

|

||||||

if config.INNER_VERSION in SpecifierSet(">=0.0.7"):

|

if config.INNER_VERSION in SpecifierSet(">=0.0.7"):

|

||||||

config.enable_debug_output = others_config.get("enable_debug_output", config.enable_debug_output)

|

# config.enable_debug_output = others_config.get("enable_debug_output", config.enable_debug_output)

|

||||||

config.enable_friend_chat = others_config.get("enable_friend_chat", config.enable_friend_chat)

|

config.enable_friend_chat = others_config.get("enable_friend_chat", config.enable_friend_chat)

|

||||||

|

|

||||||

# Version specifier: >=1.0.0,<2.0.0

|

# Version specifier: >=1.0.0,<2.0.0

|

||||||

|

|||||||

@@ -38,9 +38,9 @@ class EmojiManager:

|

|||||||

|

|

||||||

def __init__(self):

|

def __init__(self):

|

||||||

self._scan_task = None

|

self._scan_task = None

|

||||||

self.vlm = LLM_request(model=global_config.vlm, temperature=0.3, max_tokens=1000, request_type="image")

|

self.vlm = LLM_request(model=global_config.vlm, temperature=0.3, max_tokens=1000, request_type="emoji")

|

||||||

self.llm_emotion_judge = LLM_request(

|

self.llm_emotion_judge = LLM_request(

|

||||||

model=global_config.llm_emotion_judge, max_tokens=600, temperature=0.8, request_type="image"

|

model=global_config.llm_emotion_judge, max_tokens=600, temperature=0.8, request_type="emoji"

|

||||||

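) # higher temperature, fewer tokens (the temperature could later be adjusted based on mood)

|

) # higher temperature, fewer tokens (the temperature could later be adjusted based on mood)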

|

||||||

|

|

||||||

def _ensure_emoji_dir(self):

|

def _ensure_emoji_dir(self):

|

||||||

@@ -111,7 +111,7 @@ class EmojiManager:

|

|||||||

if not text_for_search:

|

if not text_for_search:

|

||||||

logger.error("无法获取文本的情绪")

|

logger.error("无法获取文本的情绪")

|

||||||

return None

|

return None

|

||||||

text_embedding = await get_embedding(text_for_search)

|

text_embedding = await get_embedding(text_for_search, request_type="emoji")

|

||||||

if not text_embedding:

|

if not text_embedding:

|

||||||

logger.error("无法获取文本的embedding")

|

logger.error("无法获取文本的embedding")

|

||||||

return None

|

return None

|

||||||

@@ -242,7 +242,33 @@ class EmojiManager:

|

|||||||

image_hash = hashlib.md5(image_bytes).hexdigest()

|

image_hash = hashlib.md5(image_bytes).hexdigest()

|

||||||

image_format = Image.open(io.BytesIO(image_bytes)).format.lower()

|

image_format = Image.open(io.BytesIO(image_bytes)).format.lower()

|

||||||

# Check whether the emoji is already registered

|

# Check whether the emoji is already registered

|

||||||

existing_emoji = db["emoji"].find_one({"hash": image_hash})

|

existing_emoji_by_path = db["emoji"].find_one({"filename": filename})

|

||||||

|

existing_emoji_by_hash = db["emoji"].find_one({"hash": image_hash})

|

||||||

|

if existing_emoji_by_path and existing_emoji_by_hash:

|

||||||

|

if existing_emoji_by_path["_id"] != existing_emoji_by_hash["_id"]:

|

||||||

|

logger.error(f"[错误] 表情包已存在但记录不一致: {filename}")

|

||||||

|

db.emoji.delete_one({"_id": existing_emoji_by_path["_id"]})

|

||||||

|

db.emoji.update_one(

|

||||||

|

{"_id": existing_emoji_by_hash["_id"]}, {"$set": {"path": image_path, "filename": filename}}

|

||||||

|

)

|

||||||

|

existing_emoji_by_hash["path"] = image_path

|

||||||

|

existing_emoji_by_hash["filename"] = filename

|

||||||

|

existing_emoji = existing_emoji_by_hash

|

||||||

|

elif existing_emoji_by_hash:

|

||||||

|

logger.error(f"[错误] 表情包hash已存在但path不存在: {filename}")

|

||||||

|

db.emoji.update_one(

|

||||||

|

{"_id": existing_emoji_by_hash["_id"]}, {"$set": {"path": image_path, "filename": filename}}

|

||||||

|

)

|

||||||

|

existing_emoji_by_hash["path"] = image_path

|

||||||

|

existing_emoji_by_hash["filename"] = filename

|

||||||

|

existing_emoji = existing_emoji_by_hash

|

||||||

|

elif existing_emoji_by_path:

|

||||||

|

logger.error(f"[错误] 表情包path已存在但hash不存在: {filename}")

|

||||||

|

db.emoji.delete_one({"_id": existing_emoji_by_path["_id"]})

|

||||||

|

existing_emoji = None

|

||||||

|

else:

|

||||||

|

existing_emoji = None

|

||||||

|

|

||||||

description = None

|

description = None

|

||||||

|

|

||||||

if existing_emoji:

|

if existing_emoji:

|

||||||
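The `EmojiManager` hunk above looks an emoji up twice, once by filename and once by content hash, and then reconciles the two results. The sketch below restates that four-way decision against an in-memory dict instead of MongoDB. The function name `reconcile`, the `records` mapping, and the exact nesting of the first branch are assumptions, since indentation is lost in this rendering of the diff.

```python
from typing import Optional


def reconcile(records: dict, filename: str, image_hash: str, image_path: str) -> Optional[dict]:
    """Return the record to reuse for this file, or None if it must be re-registered.

    records maps _id -> record dict and stands in for the db["emoji"] collection.
    """
    by_path = next((r for r in records.values() if r["filename"] == filename), None)
    by_hash = next((r for r in records.values() if r["hash"] == image_hash), None)

    if by_path and by_hash:
        if by_path["_id"] != by_hash["_id"]:
            # Two different records claim this file: drop the filename match
            # and repoint the hash match at the current file.
            del records[by_path["_id"]]
            by_hash.update(path=image_path, filename=filename)
        return by_hash
    if by_hash:
        # Same content seen under a new name: repoint the existing record.
        by_hash.update(path=image_path, filename=filename)
        return by_hash
    if by_path:
        # Same name, different content: the old record is stale.
        del records[by_path["_id"]]
        return None
    return None
```

Treating the hash as the identity of an emoji and the filename as a mutable attribute is what lets a renamed file keep its existing description and embedding.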

@@ -284,7 +310,7 @@ class EmojiManager:

|

|||||||

logger.info(f"[检查] 表情包检查通过: {check}")

|

logger.info(f"[检查] 表情包检查通过: {check}")

|

||||||

|

|

||||||

if description is not None:

|

if description is not None:

|

||||||

embedding = await get_embedding(description)

|

embedding = await get_embedding(description, request_type="emoji")

|

||||||

# Prepare the database record

|

# Prepare the database record

|

||||||

emoji_record = {

|

emoji_record = {

|

||||||

"filename": filename,

|

"filename": filename,

|

||||||

@@ -366,6 +392,12 @@ class EmojiManager:

|

|||||||

logger.warning(f"[检查] 发现缺失记录(缺少hash字段),ID: {emoji.get('_id', 'unknown')}")

|

logger.warning(f"[检查] 发现缺失记录(缺少hash字段),ID: {emoji.get('_id', 'unknown')}")

|

||||||

hash = hashlib.md5(open(emoji["path"], "rb").read()).hexdigest()

|

hash = hashlib.md5(open(emoji["path"], "rb").read()).hexdigest()

|

||||||

db.emoji.update_one({"_id": emoji["_id"]}, {"$set": {"hash": hash}})

|

db.emoji.update_one({"_id": emoji["_id"]}, {"$set": {"hash": hash}})

|

||||||

|

else:

|

||||||

|

file_hash = hashlib.md5(open(emoji["path"], "rb").read()).hexdigest()

|

||||||

|

if emoji["hash"] != file_hash:

|

||||||

|

logger.warning(f"[检查] 表情包文件hash不匹配,ID: {emoji.get('_id', 'unknown')}")

|

||||||

|

db.emoji.delete_one({"_id": emoji["_id"]})

|

||||||

|

removed_count += 1

|

||||||

|

|

||||||

except Exception as item_error:

|

except Exception as item_error:

|

||||||

logger.error(f"[错误] 处理表情包记录时出错: {str(item_error)}")

|

logger.error(f"[错误] 处理表情包记录时出错: {str(item_error)}")

|

||||||

|

|||||||
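The integrity check added above recomputes each emoji file's MD5 and removes records whose stored hash no longer matches. A minimal sketch of that comparison follows. It uses a `with` block so the file handle is closed, unlike the bare `open(...).read()` in the diff. The names `file_md5` and `check_record` and the string return values are hypothetical.

```python
import hashlib


def file_md5(path: str) -> str:
    """Return the hex MD5 digest of a file, closing the handle afterwards."""
    with open(path, "rb") as f:
        return hashlib.md5(f.read()).hexdigest()


def check_record(record: dict) -> str:
    """Classify a record as 'filled', 'ok', or 'mismatch'.

    'filled'   -> the record had no hash, so one was computed and stored
    'ok'       -> the stored hash matches the file on disk
    'mismatch' -> the file changed; the caller would delete the record
    """
    actual = file_md5(record["path"])
    if "hash" not in record:
        record["hash"] = actual
        return "filled"
    return "ok" if record["hash"] == actual else "mismatch"
```

In the diff, the mismatch case corresponds to `db.emoji.delete_one(...)` followed by `removed_count += 1`.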

@@ -32,11 +32,19 @@ class ResponseGenerator:

|

|||||||

temperature=0.7,

|

temperature=0.7,

|

||||||

max_tokens=1000,

|

max_tokens=1000,

|

||||||

stream=True,

|

stream=True,

|

||||||

|

request_type="response",

|

||||||

|

)

|

||||||

|

self.model_v3 = LLM_request(

|

||||||

|

model=global_config.llm_normal, temperature=0.7, max_tokens=3000, request_type="response"

|

||||||

|

)

|

||||||

|

self.model_r1_distill = LLM_request(

|

||||||

|

model=global_config.llm_reasoning_minor, temperature=0.7, max_tokens=3000, request_type="response"

|

||||||

|

)

|

||||||

|

self.model_sum = LLM_request(

|

||||||

|

model=global_config.llm_summary_by_topic, temperature=0.7, max_tokens=3000, request_type="relation"

|

||||||

)

|

)

|

||||||

self.model_v3 = LLM_request(model=global_config.llm_normal, temperature=0.7, max_tokens=3000)

|

|

||||||

self.model_r1_distill = LLM_request(model=global_config.llm_reasoning_minor, temperature=0.7, max_tokens=3000)

|

|

||||||

self.model_v25 = LLM_request(model=global_config.llm_normal_minor, temperature=0.7, max_tokens=3000)

|

|

||||||

self.current_model_type = "r1" # use R1 by default

|

self.current_model_type = "r1" # use R1 by default

|

||||||

|

self.current_model_name = "unknown model"

|

||||||

|

|

||||||

async def generate_response(self, message: MessageThinking) -> Optional[Union[str, List[str]]]:

|

async def generate_response(self, message: MessageThinking) -> Optional[Union[str, List[str]]]:

|

||||||

"""根据当前模型类型选择对应的生成函数"""

|

"""根据当前模型类型选择对应的生成函数"""

|

||||||

@@ -107,7 +115,7 @@ class ResponseGenerator:

|

|||||||

|

|

||||||

# Generate the reply

|

# Generate the reply

|

||||||

try:

|

try:

|

||||||

content, reasoning_content = await model.generate_response(prompt)

|

content, reasoning_content, self.current_model_name = await model.generate_response(prompt)

|

||||||

except Exception:

|

except Exception:

|

||||||

logger.exception("生成回复时出错")

|

logger.exception("生成回复时出错")

|

||||||

return None

|

return None

|

||||||

@@ -144,7 +152,7 @@ class ResponseGenerator:

|

|||||||

"chat_id": message.chat_stream.stream_id,

|

"chat_id": message.chat_stream.stream_id,

|

||||||

"user": sender_name,

|

"user": sender_name,

|

||||||

"message": message.processed_plain_text,

|

"message": message.processed_plain_text,

|

||||||

"model": self.current_model_type,

|

"model": self.current_model_name,

|

||||||

# 'reasoning_check': reasoning_content_check,

|

# 'reasoning_check': reasoning_content_check,

|

||||||

# 'response_check': content_check,

|

# 'response_check': content_check,

|

||||||

"reasoning": reasoning_content,

|

"reasoning": reasoning_content,

|

||||||

@@ -174,7 +182,7 @@ class ResponseGenerator:

|

|||||||

"""

|

"""

|

||||||

|

|

||||||

# Call the model to generate the result

|

# Call the model to generate the result

|

||||||

result, _ = await self.model_v25.generate_response(prompt)

|

result, _, _ = await self.model_sum.generate_response(prompt)

|

||||||

result = result.strip()

|

result = result.strip()

|

||||||

|

|

||||||

# Parse the model's output

|

# Parse the model's output

|

||||||

@@ -215,7 +223,7 @@ class InitiativeMessageGenerate:

|

|||||||

topic_select_prompt, dots_for_select, prompt_template = prompt_builder._build_initiative_prompt_select(

|